The $100M Dance Between Humans & Robots: DeepSeek vs. OpenAI Training

+ Slides & quizzes of my talk "AI Training Techniques & Architecture" + FREE access to my video course

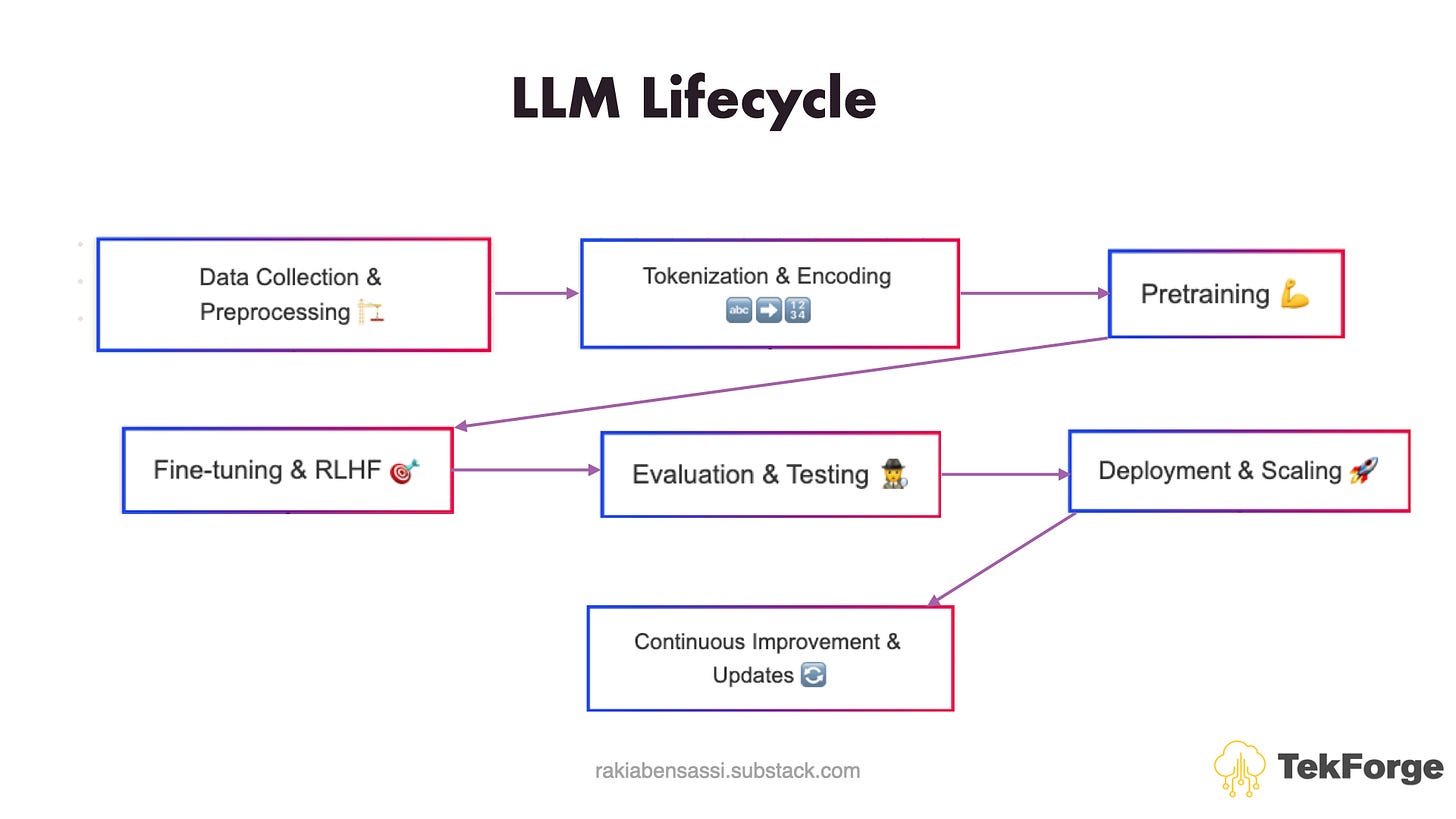

AI training isn’t magic; it’s a meticulously choreographed sequence in which balancing human ingenuity with automated scale is the ultimate dance.

DeepSeek’s playlist is lean; OpenAI’s is a symphony. Both get the job done, but one burns a bigger hole in your pocket. DeepSeek R1 is making waves: it’s lean, efficient, and doesn’t throw money at every problem.

But does that make it better?

Let’s break down how AI models are trained, where human effort ends and automation takes over, and, most importantly, why DeepSeek’s approach is shaking things up.

Free Quizzes & Slides

Before we get started, let me share some news with you.

I had an amazing time last Sunday talking 🎙️ about AI Training Techniques & LLM Architecture on the Devناس podcast!


You can also watch the full video in Arabic & English

DeepSeek R1’s Cost-Cutting Hacks

The DeepSeek model relies on Knowledge Distillation, Sparse Training & Mixture-of-Experts (MoE), Reusing Pre-Trained Models, and Pruning & Quantization.

These methods prioritize efficiency, cutting down on human labor and compute costs while maintaining competitive performance.

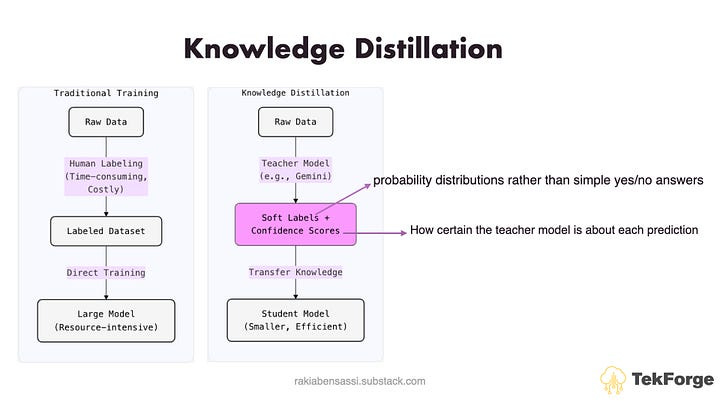

Knowledge Distillation

Human Input: Engineers set up a “teacher-student” framework, in which a large model trains a smaller one.

Automation: The smaller model learns automatically by mimicking the larger model’s outputs.

It’s like learning guitar from YouTube instead of hiring an instructor: cheaper, but still effective.
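To make the “mimicking” concrete, here is a minimal sketch of the standard temperature-scaled distillation loss in plain Python. It is not DeepSeek’s actual training code. The loss blends two terms: a KL-divergence term that pulls the student toward the teacher’s softened output distribution, and an ordinary cross-entropy term on the true label. The logits, `temperature`, and `alpha` values are illustrative assumptions.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature yields a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) between two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 2.0,  # illustrative value, not a tuned setting
    alpha: float = 0.5,        # illustrative weight between soft and hard terms
) -> float:
    # Soft part: the student mimics the teacher's softened output distribution.
    # Scaling by temperature**2 keeps its gradient magnitude comparable to the hard part.
    soft = kl_divergence(
        softmax(teacher_logits, temperature), softmax(student_logits, temperature)
    ) * temperature**2
    # Hard part: ordinary cross-entropy against the ground-truth label.
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard


# Usage: a student that tracks the teacher gets a lower loss than one that does not.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]
loss_close = distillation_loss(close_student, teacher, true_label=0)
loss_far = distillation_loss(far_student, teacher, true_label=0)
print(f"close: {loss_close:.3f}, far: {loss_far:.3f}")
```

In a real pipeline, the student’s weights would be updated by gradient descent on this loss over many examples. The sketch only evaluates the loss.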

Sparse Training & Mixture-of-Experts (MoE)

Human Input: Engineers design sparse models in which only the parts needed for a given input are activated.

Automation: During training, only the selected subnetworks (the “experts”) run for each input, which slashes compute costs.

It’s like using only the muscles you need for a workout instead of a full-body burn.
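The idea of “only the necessary parts activate” can be sketched as top-k routing, a common MoE gating scheme. A router scores every expert, but only the k best-scoring experts are actually executed, and their outputs are blended by softmax weights.

This is a toy under stated assumptions. The gate vectors and the scaling “experts” are hypothetical. Real MoE layers (DeepSeek’s included) use learned routers, load balancing, and batched tensor operations.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector, gate_weights: Sequence[Vector], experts: Sequence[Expert], k: int = 2
) -> Tuple[Vector, List[int]]:
    # Router: one score per expert (dot product of the input with that expert's gate vector).
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the top-k experts; the others are never evaluated for this input.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)
        out = [o + weight * y_j for o, y_j in zip(out, y)]
    return out, chosen


# Usage: four hypothetical toy experts that record when they are called.
calls: List[int] = []


def make_expert(scale: float, idx: int) -> Expert:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * e for e in v]
    return expert


experts = [make_expert(s, i) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
output, chosen = moe_forward([2.0, 1.0], gates, experts, k=2)
print(chosen, calls)  # experts 0 and 1 ran; experts 2 and 3 did no work
```

With four experts and `k=2`, half of the expert compute is skipped for this input. That saving is the source of MoE’s efficiency.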

Reusing Pre-Trained Models

Human Input: Engineers adapt existing models (e.g., BERT, GPT-2, GPT-3) instead of starting from scratch.

Automation: Fine-tuning on smaller, specialized datasets then requires far less data and compute than training from scratch.

It’s like modifying an old house instead of building a new one from the ground up.
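One cheap way to reuse a pre-trained model is to freeze it and train only a small task-specific “head” on top of its features. Below is a minimal sketch of that head-only fine-tuning, in plain Python.

Two pieces are hypothetical. `pretrained_features` is a fixed stand-in for a real frozen backbone such as BERT. The tiny labeled dataset is made up. Only the three head weights are trained, with logistic regression.

```python
import math
from typing import List, Sequence, Tuple


def pretrained_features(x: Sequence[float]) -> List[float]:
    # Hypothetical stand-in for a frozen pre-trained backbone: it is never updated.
    return [x[0] + x[1], x[0] - x[1], 1.0]  # the constant 1.0 acts as a bias input


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(
    data: Sequence[Tuple[Sequence[float], int]], epochs: int = 200, lr: float = 0.5
) -> List[float]:
    w = [0.0, 0.0, 0.0]  # only this small task-specific head is trained
    for _ in range(epochs):
        for x, y in data:
            f = pretrained_features(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            # Gradient step on binary cross-entropy with respect to the head weights only.
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    f = pretrained_features(x)
    return int(sigmoid(sum(wi * fi for wi, fi in zip(w, f))) >= 0.5)


# Usage: a tiny hypothetical "specialized" dataset (label 1 when x0 + x1 > 0).
dataset = [([1, 1], 1), ([2, 0.5], 1), ([0.5, 1], 1),
           ([-1, -1], 0), ([-2, 0.5], 0), ([-0.5, -1], 0)]
head = fine_tune_head(dataset)
print(predict(head, [3, 3]), predict(head, [-3, -2]))
```

Full fine-tuning would also update the backbone’s weights. Freezing it trades some accuracy for a much smaller training cost.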

Pruning & Quantization

Human Input: Engineers set thresholds for trimming unnecessary parts of the model.

Automation: Algorithms automatically remove redundant weights or neurons and compress the model, for example by storing weights at lower numerical precision (quantization).

It’s like getting a tailored suit instead of buying one off the rack: efficient, with no excess.
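Both steps can be sketched on a flat list of weights:

- **Magnitude pruning:** a human-chosen threshold decides which small weights are zeroed out.
- **Symmetric 8-bit quantization:** the remaining weights are mapped onto integers in [-127, 127], plus a single scale factor.

The weights and the 0.05 threshold are made-up values. Production tools typically apply these steps per tensor or per channel.

```python
from typing import List, Sequence, Tuple


def magnitude_prune(weights: Sequence[float], threshold: float) -> List[float]:
    # The human-chosen threshold decides what counts as "unnecessary":
    # weights with a smaller magnitude are set to zero.
    return [0.0 if abs(w) < threshold else w for w in weights]


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    # Symmetric int8 quantization: map [-max_abs, max_abs] onto [-127, 127].
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    # Recover approximate float weights from the stored integers.
    return [v * scale for v in q]


weights = [0.8, -0.02, 0.3, 0.01, -0.5]
pruned = magnitude_prune(weights, threshold=0.05)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.5]
print(q)       # [127, 0, 48, 0, -79]
```

Each stored weight now needs 8 bits instead of 32. The rounding error per weight is at most half of `scale`.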

OpenAI’s Training Playbook: Precision at a Price

OpenAI’s GPT models are trained using a mix of techniques, including Reinforcement Learning from Human Feedback (RLHF), Data Curation & Filtering, Hyperparameter Tuning, and Fine-Tuning.

These processes involve a careful balance of human expertise and automation, but that balance comes at a cost.

Reinforcement Learning from Human Feedback (RLHF)

Human Input: This is the backbone of OpenAI’s approach. Humans meticulously rank model outputs based on quality, relevance, and helpfulness, and this feedback trains a reward model that guides the AI through reinforcement learning.

Automation: Once the training loop kicks in, the model refines itself with reinforcement learning, adjusting its weights to earn higher scores from the reward model learned from human rankings.

Cheap Human Data Labelers: RLHF relies on a large workforce of data labelers, human annotators who label and rank responses. These workers, often outsourced, process millions of data points.
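The reward-model step can be sketched with the pairwise (Bradley-Terry style) loss commonly used for preference data. The loss pushes the model to score the human-preferred response above the rejected one.

This is a hypothetical toy. It uses a linear reward over two made-up features per response. In practice the reward model is itself a large neural network. A separate RL stage then optimizes the language model against it; OpenAI’s InstructGPT work used PPO for this stage.

```python
import math
from typing import List, Sequence, Tuple

Features = Sequence[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def reward(w: Sequence[float], x: Features) -> float:
    # Linear reward model: a score for one response, given its feature vector.
    return sum(wi * xi for wi, xi in zip(w, x))


def pairwise_loss(w: Sequence[float], chosen: Features, rejected: Features) -> float:
    # -log P(human prefers `chosen` over `rejected`) under a Bradley-Terry model.
    return -math.log(sigmoid(reward(w, chosen) - reward(w, rejected)))


def train_reward_model(
    pairs: Sequence[Tuple[Features, Features]], dim: int, epochs: int = 100, lr: float = 0.1
) -> List[float]:
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # The loss gradient is -(1 - sigmoid(margin)) * (chosen - rejected); step against it.
            g = 1.0 - sigmoid(margin)
            w = [wi + lr * g * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w


# Each pair is (preferred, rejected) as ranked by a human labeler.
# Hypothetical features per response: [helpfulness, toxicity].
pairs = [
    ([0.9, 0.1], [0.4, 0.2]),
    ([0.7, 0.0], [0.8, 0.9]),
    ([0.6, 0.1], [0.2, 0.1]),
]
w = train_reward_model(pairs, dim=2)
print(w)  # helpfulness weight ends up positive, toxicity weight negative
```

The labelers never write a scoring rule. Their rankings alone teach the model which features matter.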

Data Curation & Filtering

Human Input: Experts manually curate datasets, filter out toxic content, and ensure quality. Bias? Humans step in to mitigate it.

Automation: AI classifiers and regex scripts assist in filtering bulk data, but human oversight remains necessary.

Cheap Human Data Labelers: Data cleaning and filtering also require human reviewers (data labelers), particularly in large-scale text-based datasets. These tasks involve tens of thousands of human labelers reviewing millions of text snippets for accuracy, bias, and safety.
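Here is a minimal sketch of how automated filters and human reviewers can split the work. The pipeline runs in three stages:

1. A regex pass strips leftover HTML and masks email addresses.
2. A scorer flags toxic text. Clear cases are rejected or kept automatically.
3. Only the gray zone is routed to human reviewers.

The blocklist, thresholds, and keyword-based scorer are placeholders. A real pipeline would use a trained classifier rather than a word list.

```python
import re
from typing import Iterable, List, Tuple

HTML_TAG = re.compile(r"<[^>]+>")
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
BLOCKLIST = {"badword1", "badword2"}  # placeholder terms, not a real toxicity list


def toxicity_score(text: str) -> float:
    # Stand-in for a trained classifier: the fraction of words found on the blocklist.
    words = re.findall(r"[a-z0-9]+", text.lower())
    if not words:
        return 0.0
    return sum(word in BLOCKLIST for word in words) / len(words)


def filter_corpus(
    docs: Iterable[str], reject_above: float = 0.2, review_above: float = 0.05
) -> Tuple[List[str], List[str], List[str]]:
    kept: List[str] = []
    review: List[str] = []
    rejected: List[str] = []
    for doc in docs:
        # Regex pass: drop leftover HTML tags and mask email addresses.
        clean = EMAIL.sub("[EMAIL]", HTML_TAG.sub("", doc)).strip()
        if len(clean.split()) < 3:  # too short to be useful training text
            rejected.append(doc)
            continue
        score = toxicity_score(clean)
        if score > reject_above:
            rejected.append(doc)
        elif score > review_above:
            review.append(clean)  # gray zone: routed to human reviewers
        else:
            kept.append(clean)
    return kept, review, rejected


docs = [
    "<p>Transformers use attention to mix tokens.</p>",
    "ok",
    "badword1 badword1 text",
    "a long sentence with one badword1 in the middle of it ok",
    "Contact me at jane.doe@example.com for the full dataset dump",
]
kept, review, rejected = filter_corpus(docs)
print(len(kept), len(review), len(rejected))  # 2 1 2
```

Tightening `review_above` sends more text to humans, raising labeling cost. Loosening it lets more borderline text through unchecked.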

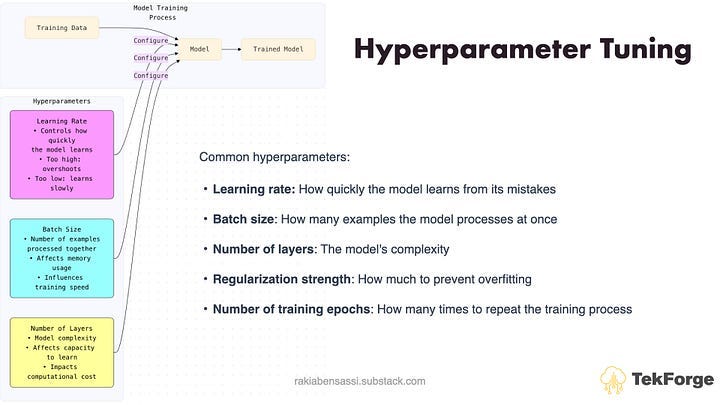

Hyperparameter Tuning

Human Input: Experts select initial values for learning rates and batch sizes based on intuition.

Automation: Automated search techniques such as Bayesian optimization then refine these hyperparameters, trying candidate values and keeping the ones that perform best.
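Below is a sketch of **random search**, a simpler and commonly used baseline that is plainly not Bayesian optimization. The division of labor is the same: humans choose the search ranges, and the loop samples and scores candidates automatically.

`validation_loss` is a hypothetical toy function. In a real run, each call would mean training and evaluating a model. Bayesian optimization improves on this loop by fitting a surrogate model to past trials to decide where to sample next.

```python
import math
import random
from typing import Callable, List, Tuple

Trial = Tuple[float, float, int]  # (validation loss, learning rate, batch size)


def validation_loss(lr: float, batch_size: int) -> float:
    # Hypothetical toy objective, lowest near lr = 1e-3 and batch_size = 64.
    return (math.log10(lr) + 3) ** 2 + (math.log2(batch_size) - 6) ** 2 / 10


def random_search(
    objective: Callable[[float, int], float], n_trials: int = 30, seed: int = 0
) -> Tuple[Trial, List[Trial]]:
    rng = random.Random(seed)
    history: List[Trial] = []
    for _ in range(n_trials):
        # Search space chosen by a human: log-uniform learning rate, a few batch sizes.
        lr = 10 ** rng.uniform(-5, -1)
        batch_size = rng.choice([16, 32, 64, 128, 256])
        history.append((objective(lr, batch_size), lr, batch_size))
    best = min(history, key=lambda trial: trial[0])
    return best, history


best, history = random_search(validation_loss)
print(f"best loss {best[0]:.4f} at lr={best[1]:.2e}, batch_size={best[2]}")
```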

Fine-Tuning

Human Input: Labeled datasets (e.g., medical QA) require human annotators to ensure accuracy.

Automation: The model adjusts its weights to match the human-provided examples.

Cheap Human Data Labelers: Fine-tuning demands a substantial workforce of annotators, particularly for domain-specific applications. Depending on the complexity, thousands of labelers may be involved over several months, working in teams to process specialized training data.

Who Wins?

If you want the Rolls-Royce of AI, meticulously crafted with a human touch, OpenAI is your pick. But if you’re looking for a Tesla (efficient, smart, and cost-effective), DeepSeek’s got your back.

The real question?

Are you willing to pay premium prices for nuanced responses, or do you just need a fast, reliable AI that doesn’t break the bank?

Either way, the AI race is heating up, and your choice just got much more interesting.

Further Reading and Viewing

📖 How to Transform Your Boring Personal Stories Into Engaging Ones?

📖 Behind the Scenes: How I Turn My Stories Into YouTube Videos

🎥 Behind the Scenes: How I Turn My Stories Into YouTube Videos

🎁 Special Gift for You

I’ve got a couple of great offers to help you go even deeper: discounts and free access to my video courses, available for a limited time, so don’t wait too long!

🔥 Secure Software Development

FREE coupon 4206BFE9C0194E8C4C0D

FREE coupon 498C386CF0EC5658D15C

🐳 Getting Started with Docker & Kubernetes + Hands-On

FREE coupon B880473C053745E23792

💯 Modern Software Engineering: Architecture, Cloud & Security

Discount coupon 4FFCC6523EE1622AE0EA

⚡ Master Web Performance: From Novice to Expert

Discount coupon 05FAB943B30B2B3FC710

Until next time—stay curious and keep learning!

Best,

Rakia

Want more?

💡 🧠 I share content about engineering, technology, and leadership for a community of smart, curious people. For more insights and tech updates, join my newsletter and subscribe to my YouTube channel.